GPT-4 Turbo with 128K Context: New AI Models and Developer Products from OpenAI's DevDay

OpenAI Announcements Set New Benchmarks for Developers 📢✨

OpenAI's DevDay brought developers a broad set of new features, improved capabilities, and significant price reductions across the platform. The major highlights were:

- GPT-4 Turbo's enhanced capability and affordability, and its support for a 128k context window.

- The launch of the Assistants API - a major leap forward in assisting AI app developers.

- The expanded multimodal capabilities of the platform that now include vision, image creation with DALL·E 3, and text-to-speech (TTS).

GPT-4 Turbo with 128K Context: A New Era of Capacity and Affordability 🚀💸

Following the launch of the first version of GPT-4, OpenAI is releasing a preview of its next-generation model, GPT-4 Turbo. The model is designed to deliver better performance at a lower cost, and its 128K context window can hold the equivalent of more than 300 pages of text in a single prompt.
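The "more than 300 pages" figure can be sanity-checked with simple arithmetic. The sketch below assumes a 128,000-token window, about 0.75 English words per token, and about 300 words per page. All three numbers are rule-of-thumb assumptions for illustration, not figures from the announcement.

```python
# Back-of-the-envelope check of the "more than 300 pages" claim.
# Every constant here is an assumption, not an official OpenAI number.
CONTEXT_TOKENS = 128_000   # assumed size of the "128K" window
WORDS_PER_TOKEN = 0.75     # assumed average for English prose
WORDS_PER_PAGE = 300       # assumed page density


def estimate_pages(tokens: int,
                   words_per_token: float = WORDS_PER_TOKEN,
                   words_per_page: int = WORDS_PER_PAGE) -> float:
    """Convert a token budget into an approximate page count."""
    return tokens * words_per_token / words_per_page


pages = estimate_pages(CONTEXT_TOKENS)
print(f"{CONTEXT_TOKENS:,} tokens is roughly {pages:.0f} pages")
```

Under these assumptions the window holds about 320 pages. Denser pages or non-English text would change the result noticeably.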

Function Calling Updates: Streamlining AI Integration 🔄🔀

Function calling gains several improvements, including the ability to request multiple function calls in a single message. An application can receive all the actions it needs in one response instead of making several round trips to the model.
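A minimal, offline sketch of how an application might handle several function calls that arrive in one assistant message. The `tool_calls` shape below follows my understanding of the Chat Completions response format and should be checked against the current API reference. The functions `get_weather` and `get_time` are hypothetical stubs, and no request is sent to OpenAI.

```python
import json


# Hypothetical local functions the model is allowed to call (stub data only).
def get_weather(city: str) -> dict:
    return {"city": city, "forecast": "sunny"}


def get_time(city: str) -> dict:
    return {"city": city, "time": "12:00"}


AVAILABLE_FUNCTIONS = {"get_weather": get_weather, "get_time": get_time}


def run_tool_calls(tool_calls: list) -> list:
    """Run every call from one assistant message and build the reply messages."""
    replies = []
    for call in tool_calls:
        name = call["function"]["name"]
        # Arguments arrive as a JSON-encoded string, not as a dict.
        args = json.loads(call["function"]["arguments"])
        result = AVAILABLE_FUNCTIONS[name](**args)
        replies.append({
            "role": "tool",
            "tool_call_id": call["id"],  # ties the result back to its call
            "content": json.dumps(result),
        })
    return replies


# Assumed shape of two parallel calls returned in a single message.
example_tool_calls = [
    {"id": "call_1", "type": "function",
     "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
    {"id": "call_2", "type": "function",
     "function": {"name": "get_time", "arguments": '{"city": "Tokyo"}'}},
]

tool_messages = run_tool_calls(example_tool_calls)
for message in tool_messages:
    print(message)
```

In a real application, the resulting messages would be appended to the conversation and sent back to the model in one follow-up request.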

Improved Instruction Following and JSON Mode ✔📁

GPT-4 Turbo follows instructions more closely than earlier models, which makes it easier to generate output in a specific format. It also introduces a JSON mode that ensures the model responds with valid JSON.
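As a rough sketch, a JSON-mode request might be assembled like this. The model name `gpt-4-1106-preview` and the `response_format` field reflect my understanding of the API at the time of the announcement and should be verified against the current documentation. The reply string is a stand-in, since the example does not call the API.

```python
import json


def build_json_mode_request(question: str) -> dict:
    """Assemble keyword arguments for a chat completion in JSON mode."""
    return {
        "model": "gpt-4-1106-preview",               # assumed preview model name
        "response_format": {"type": "json_object"},  # turns JSON mode on
        "messages": [
            # The prompt itself should also mention JSON explicitly.
            {"role": "system", "content": "Reply with a JSON object."},
            {"role": "user", "content": question},
        ],
    }


def parse_reply(content: str) -> dict:
    """Parse the reply text; raises json.JSONDecodeError if it is not valid JSON."""
    return json.loads(content)


request = build_json_mode_request("List three primary colors.")
sample_reply = '{"colors": ["red", "yellow", "blue"]}'  # stand-in for a model reply
print(parse_reply(sample_reply)["colors"])
```

JSON mode concerns syntax only. Whether the object contains the keys an application expects still has to be checked by the caller.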

Achieving Reproducible Outputs and Log Probabilities 🔁📊

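The new seed parameter makes the model return consistent completions most of the time, which helps with debugging, unit testing, and keeping tighter control over model behavior. OpenAI also said it would add, in the following weeks, an option to return the log probabilities of the most likely output tokens.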
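One way to use the seed in a test is sketched below, under the assumption that responses expose a `system_fingerprint` field identifying the backend configuration. The model name and the two response dicts are illustrative stand-ins, and nothing is sent to the API.

```python
def build_seeded_request(prompt: str, seed: int = 42) -> dict:
    """Assemble keyword arguments for a request meant to be repeatable."""
    return {
        "model": "gpt-4-1106-preview",  # assumed preview model name
        "seed": seed,                   # same seed plus same inputs: mostly the same output
        "temperature": 0,
        "messages": [{"role": "user", "content": prompt}],
    }


def same_backend(first: dict, second: dict) -> bool:
    """Return True when both responses report the same, non-empty fingerprint."""
    fingerprint = first.get("system_fingerprint")
    return fingerprint is not None and fingerprint == second.get("system_fingerprint")


# Stand-in responses; the fingerprint values are made up for illustration.
run_a = {"system_fingerprint": "fp_example_1", "text": "Hello"}
run_b = {"system_fingerprint": "fp_example_1", "text": "Hello"}
run_c = {"system_fingerprint": "fp_example_2", "text": "Hi there"}

print(same_backend(run_a, run_b))
print(same_backend(run_a, run_c))
```

Comparing outputs only when the fingerprints match keeps a test from failing just because the serving configuration changed between runs.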

Unveiling Updated GPT-3.5 Turbo 🔄📈

OpenAI is also releasing a new version of GPT-3.5 Turbo that supports a 16K context window by default, along with improved instruction following, JSON mode, and parallel function calling.

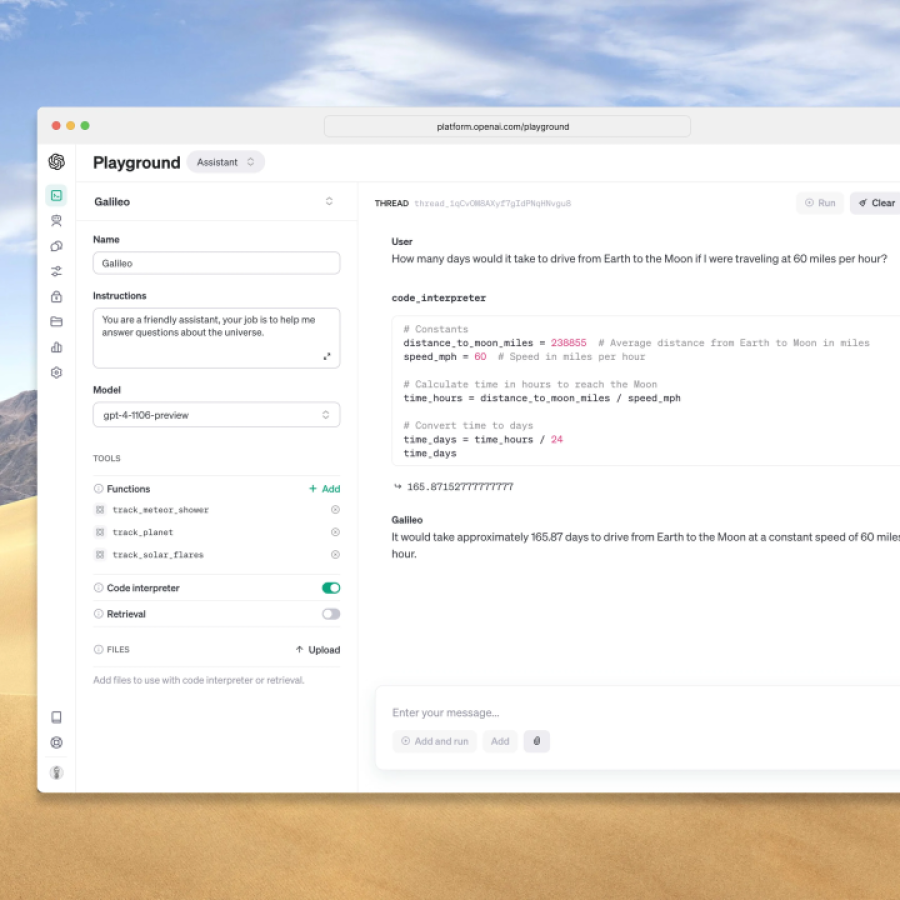

Assistants API: Empowering Developers to Create Innovative AI Apps 👨💻🆕

The Assistants API helps developers build agent-like experiences in their own applications, with access to Code Interpreter, Retrieval, and function calling.

Additional Modalities: Vision, Image Creation, and Text to Speech 👁🎨🗣

GPT-4 Turbo now accepts images as input, enabling applications such as caption generation. DALL·E 3 is now available directly through the OpenAI API, so developers can generate images programmatically. The text-to-speech API gives developers access to human-like speech synthesis.
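For the captioning use case, an image request could be assembled roughly as follows. The model name `gpt-4-vision-preview` and the mixed text and `image_url` content format are my understanding of the API at launch and should be checked against the current reference. The image URL is a placeholder, and the example only builds the payload without sending it.

```python
def build_caption_request(image_url: str,
                          prompt: str = "Write a short caption for this image.") -> dict:
    """Assemble keyword arguments for a chat completion that includes an image."""
    return {
        "model": "gpt-4-vision-preview",  # assumed vision-capable preview model
        "max_tokens": 100,                # keep the caption short
        "messages": [{
            "role": "user",
            # A single user message can mix text parts and image parts.
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }],
    }


caption_request = build_caption_request("https://example.com/photo.jpg")  # placeholder URL
for part in caption_request["messages"][0]["content"]:
    print(part["type"])
```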

Lower Pricing and Increased Rate Limits 💵⏱

To make the platform more accessible, OpenAI has reduced several prices across it. To help applications scale, the tokens-per-minute limit has also been doubled for all paying GPT-4 customers.

💡💼 OpenAI's DevDay was a landmark for AI development, introducing an array of tools, improvements, and cost reductions for developers worldwide. The expanded multimodal capabilities, the new Assistants API, and the lower prices reflect OpenAI's commitment to advancing the field.

GPT-4 Fine Tuning Experimental Access and Custom Models

OpenAI is opening an experimental access program for GPT-4 fine-tuning. Preliminary results indicate that fine-tuning GPT-4 takes more work to produce meaningful improvements over the base model than fine-tuning GPT-3.5 did, and OpenAI expects the quality and safety of GPT-4 fine-tuning to improve as that work continues. Alongside this, OpenAI is launching a Custom Models program aimed at organizations with extremely large proprietary datasets, on the order of billions of tokens at minimum.

Whisper v3 and Consistency Decoder

As part of its open-source work, OpenAI is releasing Whisper large-v3, the next version of its automatic speech recognition (ASR) model, which offers improved performance across languages. OpenAI also plans to support Whisper v3 in its API soon. In addition, OpenAI is open-sourcing the Consistency Decoder, a drop-in replacement for the Stable Diffusion VAE decoder that is intended to improve the quality of generated images.

Copyright Shield

To protect its customers' interests, OpenAI has introduced Copyright Shield. Under this program, OpenAI will step in to defend its customers, and pay the costs incurred, if they face legal claims of copyright infringement. It applies to the generally available features of ChatGPT Enterprise and the developer platform.

Revolutionizing the AI Ecosystem

OpenAI's DevDay offered a promising look at the future of AI. By unveiling the Assistants API, GPT-4 Turbo, and new multimodal capabilities, while also addressing customer protection and affordability, OpenAI has set a new benchmark in a fast-moving field. The upcoming enhancements and rollouts should keep developers, AI enthusiasts, and businesses watching for OpenAI's next move.

Want to try it? Try it now!

Source: https://openai.com/blog/new-models-and-developer-products-announced-at-devday
